Exploring the value of academic research in journalism

Photo Credit: Flickr user eclecticlibrarian

Much has been written of late about the relatively low quality of academic research in the journalism and mass communication field. This is a critical time, the dawn of a new age of communication, and there is much to learn. The value of that research is a major source of disagreement between professionals and scholars. Professionals argue that much of it is unreadable and, frankly, useless. If you take the time, scholars counter, you'll find important insights.

Why do we care about research? It's important to the future of journalism education because publication in so-called peer-reviewed journals has traditionally been the number one criterion for faculty promotion and tenure. Yes, research beats teaching.

In the professional world, journalism that makes a difference is measured by actual impact: by jailed people who are freed, by criminals who are jailed, by new laws or policies that save lives or stop government waste. This "community service" (as it is called) is not given the importance it deserves at universities. Publishing in academic journals is what counts, even if it does nothing to improve how journalism serves America. (See Geneva Overholser's blog about "what's missing" in the debate about journalism schools.)

Let's look at the details. Three main journals boast the word "journalism" in their titles. Citation research, which tracks how often scholars cite each other, paints a grim picture of these three. None of them is considered among the most cited or prestigious journals in the communication field, nor in the social sciences at large.

For this comparison we used the helpful databases built by Thomson Reuters, which tracks thousands of journals and citations. The three journals in question all are published by the Association for Education in Journalism and Mass Communication: Journalism & Mass Communication Quarterly, Journalism & Mass Communication Educator and Journalism & Communication Monographs.

Of the three, only the Quarterly has been selected for inclusion in the "Web of Science" database and given a Journal Impact Factor in Journal Citation Reports. The Educator was rejected in January 2010 but is up for re-evaluation in January 2013. Monographs is currently under evaluation. Having only one of the three "journalism-titled" journals in the database is not a good start.

In deciding whether a journal qualifies for the database, Thomson Reuters considers (1) the journal's publishing standards, (2) its editorial content, (3) its international diversity and (4) correct metadata. A journal that has never been cited, for example, would not be picked up by Thomson Reuters.

We checked the Quarterly against all the communication journals in the dataset. Given how much it publishes, how often was it cited in 2011? By Journal Impact Factor, the Quarterly ranked 48th of the 72 communication journals. Considering the importance journalists place on their profession as the "bedrock of democracy," landing in the bottom half would not sit well. Among all 2,943 social science journals, the Quarterly ranks 1,950th by the same impact measure. (The Journal Impact Factor, Thomson Reuters says, can be "used to provide a gross approximation of the prestige of journals to which individuals have been published.")
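For readers unfamiliar with the metric: the standard two-year Journal Impact Factor for a given year divides the citations received that year by items a journal published in the previous two years by the number of citable items it published in those two years. The short Python sketch below only illustrates that definition and turns the Quarterly's reported ranks into the share of journals ranked higher. The function names are our own hypothetical helpers, not part of any Thomson Reuters tool, and the sketch assumes rank 1 is the highest-impact journal.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Two-year impact factor for year Y (hypothetical helper).

    citations: citations received in year Y by items published in Y-1 and Y-2
    citable_items: number of citable items published in Y-1 and Y-2
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


def share_ranked_above(rank: int, total: int) -> float:
    """Fraction of journals ranked ahead of a journal at `rank` (1 = highest)."""
    if not 1 <= rank <= total:
        raise ValueError("rank must be between 1 and total")
    return (rank - 1) / total


# Ranks for the Quarterly as reported above (2011 citation data)
for label, rank, total in [
    ("communication journals", 48, 72),
    ("social science journals", 1950, 2943),
]:
    print(f"{label}: {share_ranked_above(rank, total):.1%} ranked higher")
```

By this arithmetic, roughly two-thirds of the journals in each list (65.3 percent and 66.2 percent) rank above the Quarterly.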

Is there a conspiracy against communication journals? Do social scientists simply not like journalism or communication? Hardly. Cyberpsychology, Behavior and Social Networking (number 1 out of 72 communication journals when ranked by Journal Impact Factor) ranks in the top 10 percent of all social science journals, again using citations in 2011. Note the words cyber and social networking in the title. We desperately need to know the social science of engagement and impact in the digital age.

Another benchmark for rating journals is Google Scholar, which lists the number of times articles or publications have been cited. In our Sept. 4 search, Journalism & Mass Communication Quarterly produced 7,730 results, Journalism & Mass Communication Educator produced 1,140 results and Journalism & Communication Monographs produced 284 results.

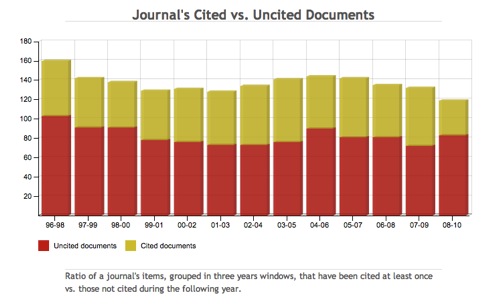

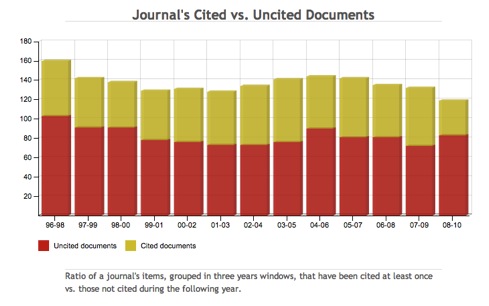

These are bad numbers when you consider that there are 7,149 full-time and 5,162 part-time professors who should be reading and quoting each other. But they get worse when you realize that only some of the articles are cited at all. The chart below is from SCImago Journal & Country Rank, which also tracks citations. Every year, at least half of the Journalism & Mass Communication Quarterly articles go totally uncited. The latest year on record shows no citations for a whopping 69 percent of the articles. Remember, the Quarterly looks to us like the best of the three "journalism journals."

Is it really wise to base tenure and promotion upon journal articles that are never cited? It’s difficult to imagine working journalists promoted for writing stories no one ever mentioned.

Perhaps the good research is really good. At the 2012 convention, the Association for Education in Journalism and Mass Communication gave out a thumb drive with the "best" scholarly articles from decades of the journals. We reviewed them. Alas, for the most part, they seemed derivative, obvious or obtuse. To quote a senior journalism educator: "There are three categories of research these days: 1. Who cares? 2. No shit! 3. I don't have any idea what you are talking about." To be generous, perhaps we should add a category: "4. Needs more work, but there might be something there. (Or, Close But No Cigar.)"

Some of the "Research You Can Use" items listed on the AEJMC website seemed to fit into Category 4: the social responsibility of news organizations, gatekeeping, agenda-setting and "framing" all seemed close. But through the lens of today's explosion of social and mobile media and its attendant participatory culture, only such classics as Marshall McLuhan's "The Medium Is the Message" and Walter Lippmann's "Public Opinion" seem to hold water. Yes, media gives us a picture of the world. Media types affect messages. But it's all different now in the digital age.

Valiant educators over the years, such as Del Brinkman (formerly of Knight Foundation) and, currently, Michael Schudson of Columbia University, have tried to find and translate important scholarly work, and in the journalism field it's tough slogging. One reason: the most quoted journalism notion of the past decade, the one media mogul Rupert Murdoch famously repeated to what was then named the American Society of Newspaper Editors, did not come from a journalism journal of any type but from Marc Prensky, who showed that "digital natives" really do think differently than the rest of us. We hope Emily Bell of the Tow Center at Columbia will continue along those lines as she develops applied research capacity. And the great teams at Missouri, the University of North Carolina and elsewhere keep producing important work (even if it isn't published in the journals we are writing about today).

How do the editors of the journalism journals react to being ignored, in relative terms, by other scholars? They say they aren't marketing themselves well enough. They say they don't get enough funding for research. They say some articles aren't meant to be cited (they have actually created a category for these, which reduces the embarrassment of having more than half of a year's articles go uncited). If you mention this blog to a prominent scholar, you will be regaled with the shortcomings of citation studies, just as scholars who can't write clearly will go on ad nauseam about the shortcomings of the Flesch readability test.

We wrote to Dr. Daniel Riffe at the University of North Carolina, editor of the most-cited journalism journal, the Quarterly. Here's what we asked:

1. What do you think of citation studies, specifically the Thomson Reuters impact scale, that rank the Quarterly 48 of 72 in the communication field?

2. The SCImago Journal and Country Rank, from the Scopus database, says nearly 70 percent of the articles in the Quarterly are not cited at all. If that is accurate, why is that?

3. Are journal citations in general a good measure of the quality of a journal? Why or why not?

4. What would you say to those who argue that the quality of the Quarterly and the AEJMC journals should be significantly improved? If that needs to happen, how could that be done?

5. Is there any piece of research, cited or uncited, that you think proves the value of the Quarterly in its mission of keeping up with the latest developments? Are there, for example, any of the AEJMC-cited "Research You Can Use" items that are especially illustrative?

Dr. Riffe, Richard Cole Eminent Professor at UNC/Chapel Hill, said he would answer as soon as time allows but noted his journal work is “on hold” due to the start of a new semester.

Meanwhile, let's ponder the advice of America's great early journalist, the writer and statesman Benjamin Franklin: "If you would not be forgotten as soon as you are dead & rotten, either write things worth reading, or do things worth the writing." Since so many journalism and communication professionals endeavor to do the latter, the least scholars can do is try harder to do the former. Being promoted and getting a lifetime job guarantee for writing things no one cites is just un-American.

By Knight Foundation’s Eric Newton, senior adviser to the President, and Amber Robertson, special projects contractor