News and Information Disorder in the 2020 Presidential Election

Throughout the 2020 election campaign, there were growing concerns about the spread of false information on social media, along with debate over the role platforms should play in addressing information disorder (i.e., misinformation, disinformation and malinformation). Now that the election is over, we must evaluate how effective the various strategies used by platforms and media organizations to address misinformation and disinformation during the election were, and weigh their ethical and legal ramifications. The Information Society Project at Yale Law School invited leading misinformation scholars from a range of disciplines (communication, computer science, law, psychology and political science) to reflect on important questions raised during the 2020 presidential campaign and election, particularly questions about information disorder created and aggravated by social media algorithms. You can find the essays below. They appear in the order in which they were presented at the Yale ISP conference this fall. Recordings of the speaker presentations are also available.

Misinformation research, four years later

Andrew Guess, assistant professor of politics and public affairs at Princeton University, considers the possibilities for research on misinformation in a post-Trump world.

Towards a diachronic understanding of the harm potential of information disorder — reflections on Election 2020

Sam Gill, Knight Foundation senior vice president and chief program officer, suggests that Election 2020 has thrown into relief the opportunity for a "diachronic" approach to the study of information disorder. Under this approach, researchers would first address the higher-priority categories of harm stemming from information disorder, paying particular attention to when the harm occurs and to the parties most affected.

News organizations as fact-checkers: Any potential issue?

Jisu Kim, a resident fellow at the Information Society Project at Yale Law School, and Soojong Kim, a postdoctoral fellow at Stanford PACS, examine how perceptions of the source-target relationship affect the perceived credibility of information and evaluations of its targets, in the context of news organizations and politicians. They explain that the perceived relationship between news organizations and political figures might not influence evaluations of the article itself, but may influence the public's evaluations of the political figures involved.

Social influence campaigns in the cyber information environment

Kathleen M. Carley, a professor in the School of Computer Science at Carnegie Mellon University and director of the Center for Informed Democracy and Social-cybersecurity, describes this newly emerging scientific field and discusses how social-cybersecurity methods were used to identify a number of influence campaigns aimed at voters since the start of the pandemic.

Good-enough interventions

Lisa Fazio, an assistant professor of psychology at Vanderbilt University, argues that rather than holding out for the perfect solution to our current information disorder, we need to start implementing multiple flawed interventions. Borrowing the Swiss cheese metaphor from public health, she suggests that any single solution to misinformation will be imperfect, but that by stacking multiple interventions, we can achieve excellent protection.

Facebook’s responsibility

Ethan Porter, an assistant professor at George Washington University, describes evidence indicating that factual corrections can reduce belief in misinformation shared on social media. One implication of this evidence, he argues, is that Facebook could be doing more than it is currently to reduce belief in misinformation.

Coordination: A prerequisite for an effective fight against misinformation

Valerie Belair-Gagnon, an assistant professor at the University of Minnesota-Twin Cities; Oscar Westlund, a professor at Oslo Metropolitan University; and Bente Kalsnes, an associate professor at Kristiania University College, explain that fragmented practices, shaped by the differing missions, interests and capabilities of organizations (e.g., fact-checkers, news organizations and platform companies), point to a larger issue: how can truth gain legitimacy if institutions are fragmented and there is limited coordination among actors? Tech companies, as arbiters of democracy, and journalists and fact-checkers, as civic gatekeepers, will have to reckon together with these larger institutional questions and power dynamics, which a sociotechnical-systems perspective can help unpack.

The road to hell is paved with good algorithms: The case for deactivating recommendation algorithms in the political sphere

Jonas Kaiser, an assistant professor at Suffolk University, faculty associate at the Berkman Klein Center for Internet & Society at Harvard University, and associate researcher at the Humboldt Institute for Internet and Society, argues that recommendation algorithms on social media platforms are inherently flawed. Drawing on research ranging from computer science to communication science, as well as his own research on YouTube's algorithms, Kaiser contends that recommendation algorithms can't be fixed and are at odds with the public good. He therefore proposes a radical solution: deactivating recommendation algorithms in the political sphere.

Experimental evaluation of misinformation interventions

David G. Rand, professor of management science and brain and cognitive sciences at MIT, emphasizes the importance of guiding social media policy with experimental evidence rather than intuition alone. He describes how experiments show that intuitively appealing approaches (e.g., corrections based on fact-checking) may have unexpected downsides, while counterintuitive approaches (e.g., crowdsourced detection of misinformation and simple accuracy nudges) show promise.

A model for intuitive internet governance

Kate Klonick, assistant professor at St. John's University Law School, presents a framework for understanding when, and how much, platforms should be expected to prioritize democratic values. Looking beyond the misinformation and disinformation threats of the 2020 election and the pandemic, she classifies platforms by size and product type to clarify when intervention is most critical for ensuring accountability, transparency, participation and free expression, and what potential regulatory efforts might look like.

What can and should platforms be responsible for?

Daniel Kreiss, Cato Associate Professor at the Hussman School of Journalism and Media and Principal Researcher at the UNC Center for Information, Technology, and Public Life, and Bridget Barrett, Park Fellow at the Hussman School, argue that researchers need a robust understanding of the relationship between information disorder, platforms and democratic decay. They analyze several academic literatures to conceptualize how platforms may indirectly contribute to declining political tolerance, the rise of anti-democratic political parties and figures, the erosion of faith in democracy and knowledge-producing institutions, and the undermining of forms of political accountability.

The evolution of computational propaganda: Bots, influencers and platform responsibility

Samuel Woolley, program director of propaganda research at the Center for Media Engagement and assistant professor of journalism and media at UT Austin, argues that computational propaganda is moving away from impersonal, heavy-handed manipulation tactics and toward more subtle, relationally focused efforts. He describes how these increasingly personal and data-driven strategies are playing out across encrypted messaging applications, peer-to-peer text messaging and emerging digital communication spaces, and he discusses these platforms' struggles to respond.

Disinformation gets physical: The internet of things as an emerging terrain

Laura DeNardis, professor in the School of Communication at American University and faculty director of the Internet Governance Lab, identifies the Internet of Things as the new frontier for disinformation. As the internet diffuses into the material world all around us, the policy problems of two-dimensional digital space have leapt into three-dimensional real-world space, with physical implications for human safety and national security.

Regulating AI: The question now is no longer whether, but how

Olivier Sylvain, professor of law at Fordham University, suggests that automated decision-making systems are now pervasive and familiar enough that policymakers are past the caricatured question of whether positive government intervention is prudent. As in other legislative fields, policymakers should now be defining the circumstances under which tailored regulations are appropriate.

The 2020 Election Integrity Partnership

Jevin West, an associate professor in the Information School at the University of Washington and director of the Center for an Informed Public, describes the Election Integrity Partnership and some of the challenges ahead in monitoring misinformation and disinformation in real time. He highlights two of those challenges that have policy implications: repeat offenders of disinformation and the increased use of livestream video.

The emerging science of content labeling: “Soft” interventions and hard public problems

John P. Wihbey, a faculty affiliate with the Ethics Institute at Northeastern University and an assistant professor of journalism and media innovation, argues that content moderation efforts that give users greater context are key to an information future that both promotes democratic health and respects free speech. As social media platforms apply more cautionary or contextual labels to content, researchers must study the effects of these labels more critically.

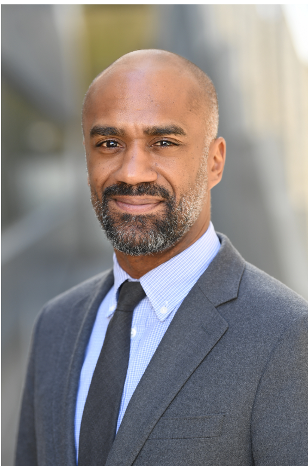

Race, misinformation, and voter depression

Guy-Uriel E. Charles, the Edward and Ellen Schwarzman Professor of Law at Duke Law School, and Mandy Boltax, a software engineer and second-year student at Duke Law, caution against an overly technocentric framing of information disorder (the intentional deployment of misinformation to influence electoral politics). They argue that misinformation, abetted by innovations in technology and social media, is novel only to the extent that it is the newest chapter in a long history of strategies designed to demobilize Black voters. Accordingly, it is imperative that scholars separate the roles of race, technology and politics in understanding race-based misinformation campaigns.

Don’t über-blame the algorithms — lessons from Europe

Amélie Heldt, researcher and doctoral candidate at the Leibniz-Institute for Media Research, argues that we should not focus only on technology when it comes to fixing information disorder. Over the past four years, there has been no evidence of successful disinformation campaigns against elections in Europe. Instead, we are witnessing a global rise in populism and polarization, which she argues should lead us to build technology with a stronger focus on diversity and civility.

Disinformation and the disingenuous discourse of victimhood

Ari Ezra Waldman, a professor of law and computer science at Northeastern University School of Law and Khoury College of Computer Sciences, argues that misinformation about nonexistent “fraud” in the 2020 election is part of a larger discursive and legal campaign among conservatives to situate themselves as “victims” in order to use U.S. law’s anticlassification principle to cement their power in society, permit discrimination against marginalized groups, and reconstitute a society in which law protected the rights of white, wealthy men, but denied those same rights to others.

Photo (top) by Johnson Wang on Unsplash