What types of misinformation do social media companies restrict? It’s complicated

A recent Gallup/Knight study found that three-quarters of Americans are very concerned about misinformation on the internet but have no clear sense of who bears responsibility for dealing with content that is misleading, harmful, or designed to interfere in the democratic process. While social media companies have historically been reluctant to police content on their platforms with a heavy hand, the COVID-19 crisis and the 2020 election season have brought renewed focus on their content moderation policies and practices.

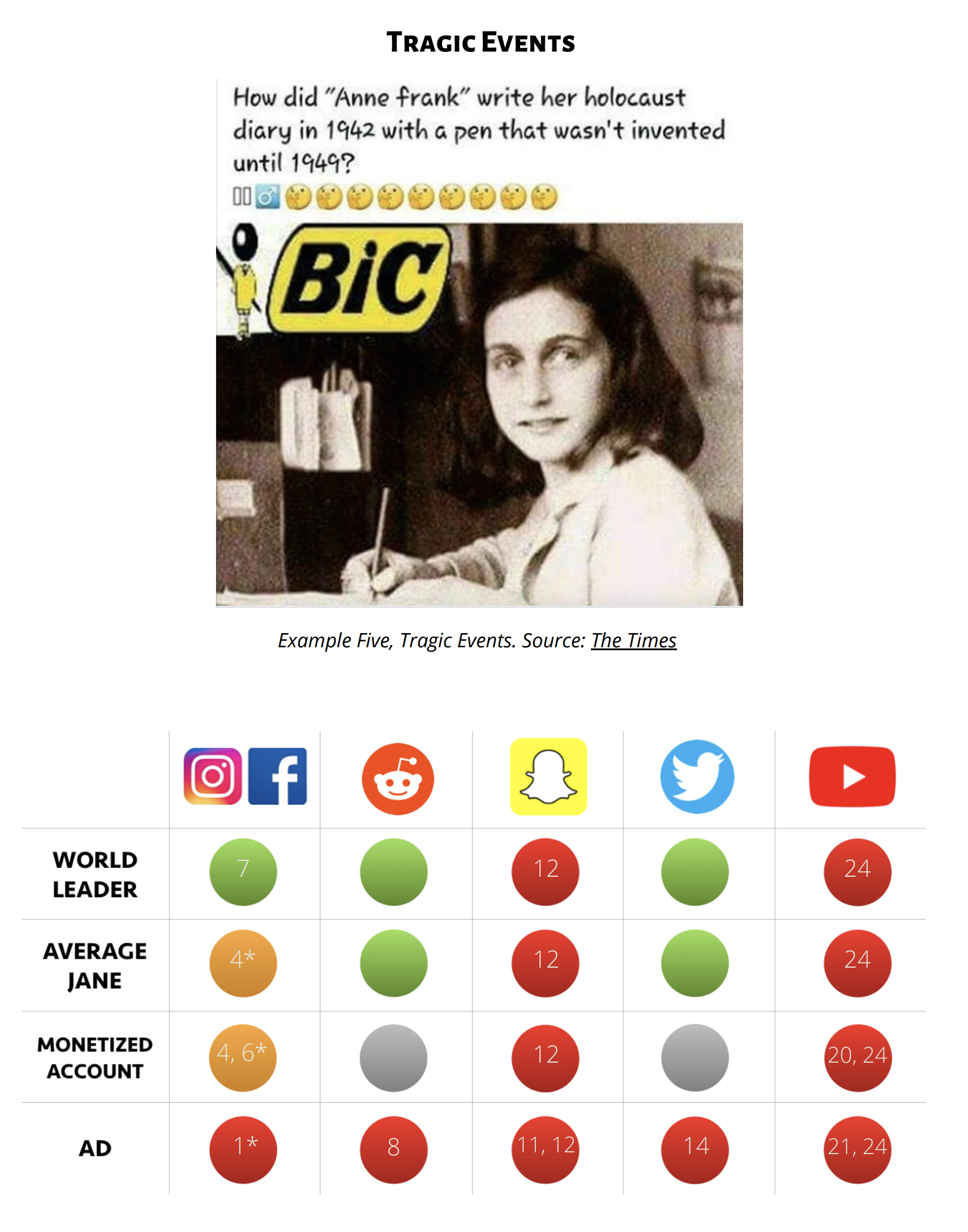

What are social media companies’ content policies and how are they implemented? A new report out of the Center for Information, Technology and Public Life at UNC systematically documents the various fact-checking and content moderation approaches of a range of social media companies. It also includes case studies that illustrate how these policies work in practice.

Download the complete study (PDF): Enforcers of the Truth: Social Media Platforms & Misinformation

The report found that social media platforms limit moderation to content likely to do the most harm, and that their strategies rely on the existence of established, uncontroversial facts agreed upon by knowledge-producing and verifying institutions.

“Increasingly, however, such agreement is often not the case,” the report notes.

The Knight-supported Center for Information, Technology and Public Life (CITAP) at the University of North Carolina at Chapel Hill is dedicated to researching, understanding and responding to the growing impact of the internet, social media, and other forms of digital information sharing.

Image (top) by Anas Alshanti on Unsplash.